AVM is here - the TLDR on OpenAI's Advanced Voice Mode

Here's the skinny on OpenAI's big announcement, and cool ways people are already using it to do interesting (and sometimes silly) things

Yesterday was a big day as my feed on X quickly became saturated with tweets about OpenAI’s big announcement - AVM, Advanced Voice Mode. While this technically started rolling out in July, almost nobody was included; now it’s rolling out to all Plus and Team users. OpenAI announced AVM in a tweet at 11:11am PST yesterday. Here’s the tweet:

And yes, it includes the feature you’ve all been waiting for: the ability to say “sorry I’m late” in over 50 languages. To me, this seemed like a weird selling point, since Google Translate has been able to say “sorry I’m late” (and a lot more) in 243 languages for a long time now.

That being said, this release is a game-changer in many ways. For one, it’s the next logical step forward as we move towards AGI. Without voice, AGI isn’t going to happen, so this is an absolutely necessary move.

I also strongly believe that this marks the beginning of the obsolescence of the keyboard. Yes, I said it, and I mean it - the keyboard is going away, and decades from now we’re all going to feel like dinosaurs for ever having used one.

Here are a few nuggets about AVM:

currently use of AVM is limited to about an hour a day

it’s available only to Plus and Team users

if AVM doesn’t work for you, close and reopen the app

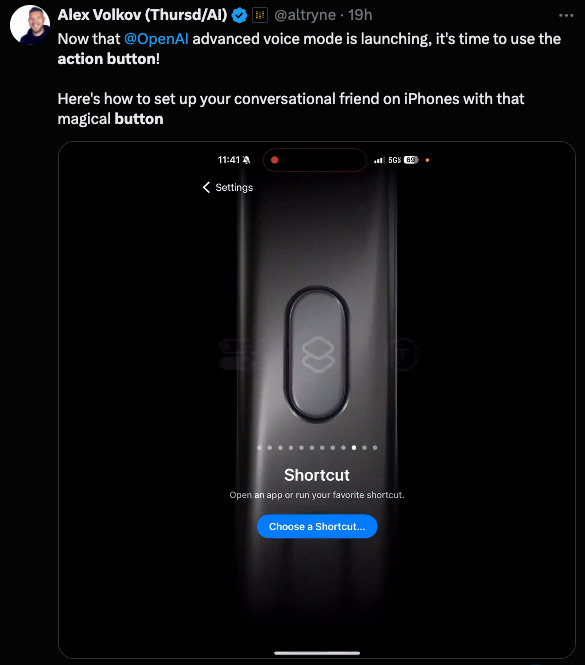

you can set AVM as the Action Button on the iPhone using Shortcuts

the release comes with five new voices (and drops “Sky,” the voice many said sounded like Scarlett Johansson)

AVM is not yet available in the EU, UK, Switzerland, Iceland, Norway, and Liechtenstein

Of course, with the big announcement also came a flood of examples of how people are already putting AVM to use. Here are a few that caught my eye:

Voice Pairing Animation from Animation Inc.

Guitar Tuning

Different Presidents ordering a Cheesesteak

(yes, this one is ridiculous but how could I not include it??)

There are a few other tips worth sharing as you’re diving into AVM. First is to start using Custom Instructions. With Custom Instructions you can tell the model how you’d like it to speak, e.g. enunciate clearly or speak slowly, refer to you by your name, etc.

You can click on the image below to view OpenAI’s tweet about Custom Instructions so you can get this rocking:

And last but not least, you can program the Action Button on your iPhone using Shortcuts. Alex has a great quick video showing you how to do this:

Okay, that’s it for now. I can’t type any more, time to talk to my new AI overlord. Have a great week everyone, and thanks to all my new subscribers, excited to have you on board!