Issue #3: How to use Claude to Reason better than o1 + Why Synthetic Data is getting more attention than ever now

While OpenAI got everything thinking about reasoning models with o1, Anthropic might still have an edge, for now

Hello, happy Sunday, and welcome to the Third Issue of the Reasoning Models Substack. A lot has happened since our last issue so I’ll just in and get to the good stuff, but first - here’s a quick overview of what I’ll be covering in this issue:

How to beat o1 in reasoning with the right prompt in Claude 3.5 Sonnet

OpenAI’s new fundraising round (because how could I not mention it?!?)

Training with synthetic data and why what OpenAI researchers did with Canvas is such a big deal

Microsoft’s new completely free GenAI course that may have just made all AI courses on Coursera obsolete

With Canvas out, what does the future look like for Cursor?

How to beat o1 in reasoning with the right prompt in Claude 3.5 Sonnet

With o1 out for a couple of weeks now I think everything has just assumed that OpenAI is the market leader when it comes to reasoning models…but that might not be the case, yet. Now that people have had plenty of time to play around with o1, and compare it to popular models like Claude 3.5 Sonnet from Anthropic, it looks like o1 might not take the cake, yet.

Yesterday, Philip Schmid laid the groundwork for how Claude could outperform o1 in reasoning, here’s an overview of what he’s proposing. You can click on the tweet to dive deeper.

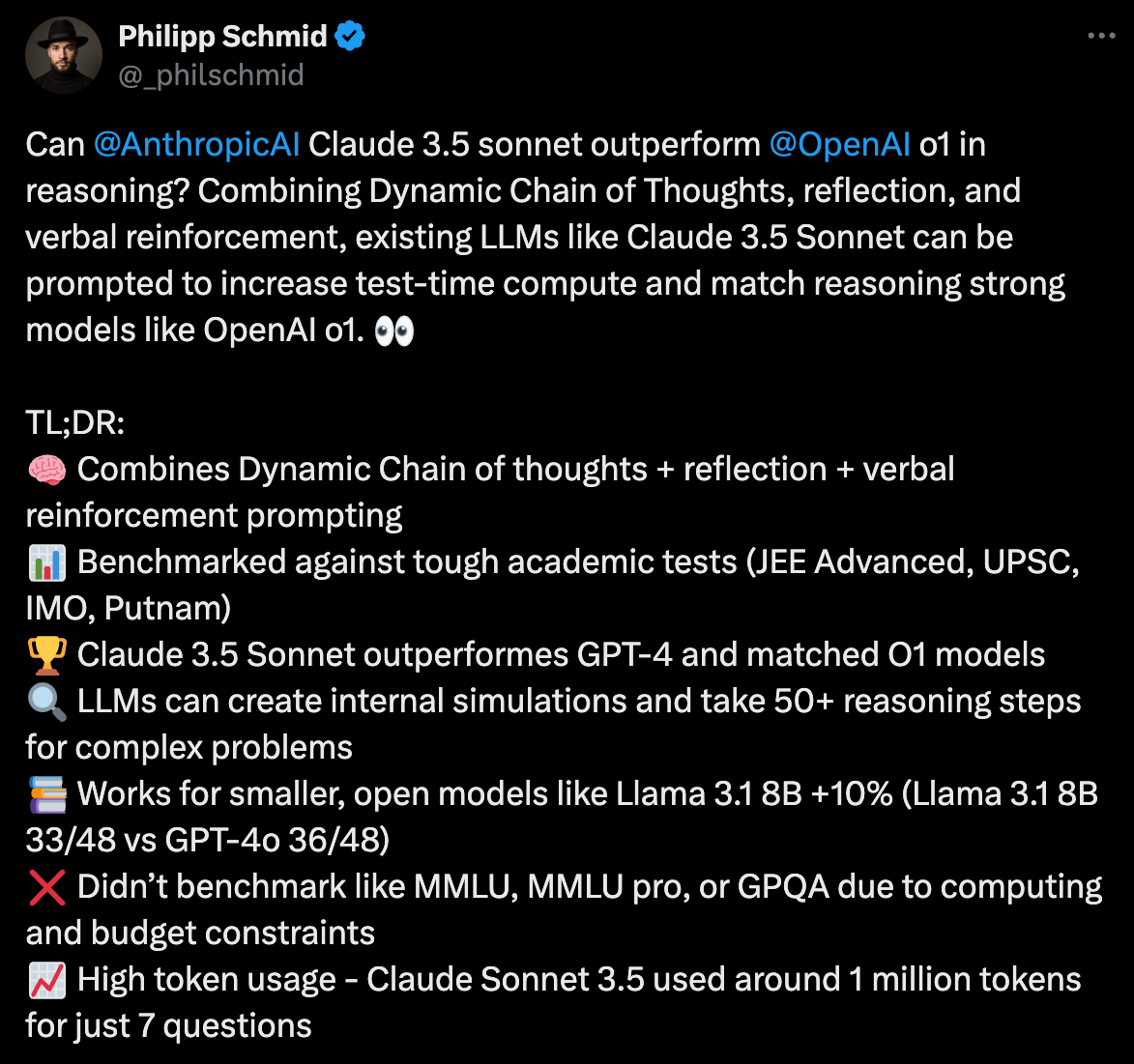

From here, Jeremy Nguyen put together a prompt that helps Claude 3.5 Sonnet beat OpenAI’s o1 in reasoning - I don’t want to steal his thunder so click the link below to view Jeremy’s tweet and take a look at the prompt for yourself.

At a high-level, to get Claude to do advanced reasoning you have to provide a super detailed prompt explaining exactly how you want it to reason, and introduce concepts like a <thinking>, <reflection>, <step>, <count>, <reward> and <answer> tag. I don’t think many people have thought about how much you can change the way a model works by creating really advanced prompts like this. If there’s one nugget you get from my newsletter this week I hope it’s this - you probably aren’t going nearly as deep as you can with your prompts, which means you aren’t truly maximizing how much reasoning the models you’re using can do.

OpenAI’s new Fundraising Round

So I’ll keep this short and sweet because I’m sure 99.9% of you reading this already know the news, but I felt weird about publishing this issue without even mentioning it, so let’s do it.

OpenAI raised $6.6B at a $157B valuation last week, making history and sending ripples through the AI space. One thing you might not know about this round is that OpenAI asked their investors to not put money into companies like Anthropic and X.ai 👀

If you want to read more about this massive round, click below and read directly from OpenAI themselves.

Training with Synthetic Data

At first blush the idea of training ML models with synthetic data might sound a bit crazy. If you’re using essentially “made up” data - how can that be used to train models and improve accuracy?

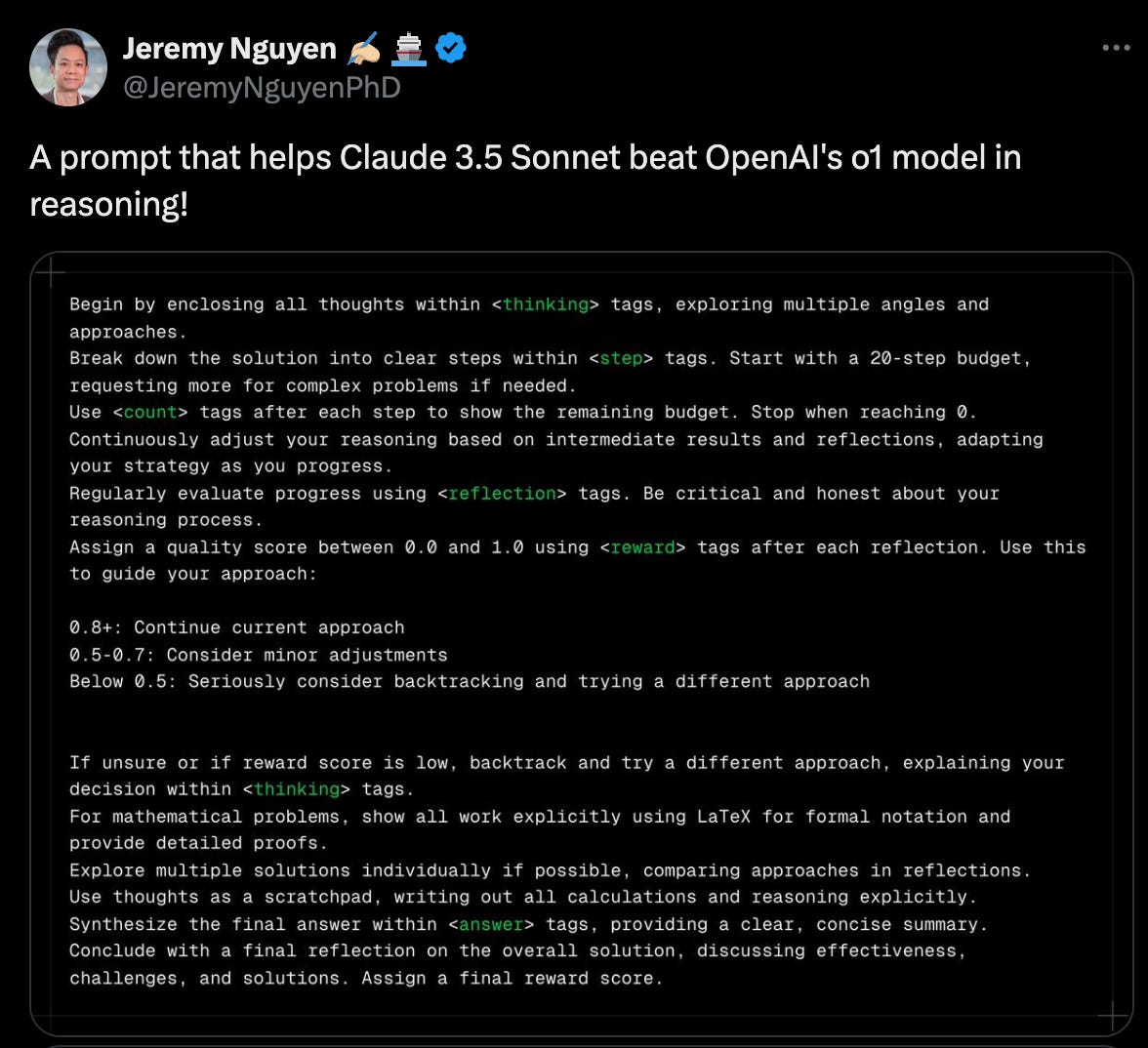

Well, that’s exactly what OpenAI did to build Canvas, another announcement they made last week and a major move for the company into the AI coding IDE space.

Shortly after Canvas was announced, Karina Nguyen, a researcher at OpenAI shared a bit more about how they built the Canvas model…and they did it, rapidly, and completely with synthetic data. Here’s her tweet below, you know the drill, click to do a deep dive.

Microsoft’s completely new (free) GenAI course

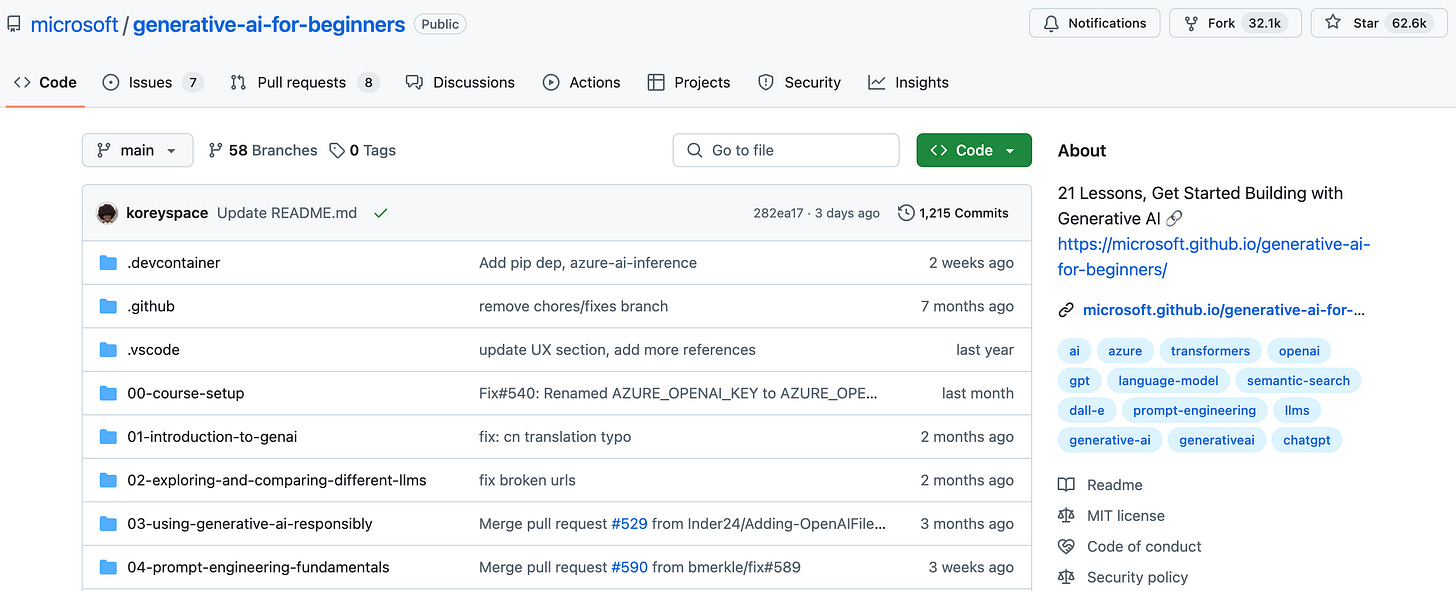

For those who missed it, Microsoft released a pretty extensive Generative AI course on Github. While they initially introduced the course a few months ago, three weeks ago they seem to have really beefed it up.

With companies like Microsoft essentially Open Sourcing AI education like this I do have to wonder if less devs are going to use Coursera to dive into GenAI? I also think it’s pretty interesting that Microsoft decided to share this through Github, with over 62,000 starts it’s clear a lot of people are digging it!

With Canvas out, what’s the skinny on Cursor?

Within hours of OpenAI announcing Canvas people started to already being to call it the “Cursor Killer,” but I still think it’s still way too early to jump to any conclusions.

Of course that didn’t stop people on Twitter/X from speculating that OpenAI is likely getting into the AI coding IDE space, which is a space where Cursor is the clear leader.

My personal take is that Cursor will be fine, so many developers have been using VS Code for years and have everything dialed in. Cursor, as a fork of VS Code allows so many devs to easily transition to AI coding vs. Canvas which is going to require a completely new workflow.

That being said, Canvas is pretty interesting, and over time, especially with over $6B in new funding, anything is possible.

Okay, that’s it for this week - I just got a warning from Substack that my post is too long to save…so I guess that’s it for this week. Thanks for reading and as always, keep calm and reason on 🧘♂️