The Reasoning Models Substack Issue #6

Grok 3 comes out tonight, GPT-4.5 and 5 is coming, Perplexity launches Deep Research, Fine-tuning DeepSeek R1 on your local machine

Hello and welcome to the sixth issue of the Reasoning Models Substack and wow, a lot has happened since my last issue. To say the reasoning models space is heating up would be an incredible understatement, it’s on fire, and there are so many changes happening each week it’s giving me no shortage of topics to cover.

This past week three major announcements were made in the Reasoning Models world - Elon announced Grok 3 is coming (tonight at 8pm PST), OpenAI announced their new model, GPT-5 is on the way, and Perplexity jumped into the Deep Research game with their own offering…also called Deep Research. That’s right we now have Google, OpenAI, and Perplexity all with products that have the same name, not confusing at all right 🤪

Also, I’m going deeper myself with this Substack. In each issue I’m really going to make an effort to go a step further, beyond the news, to help people who want to understand how Reasoning Models work. There’s nothing like the feeling of running your own LLM, and optimizing your own LLM, specifically for what you want to do with it, that’s where the real magic happens.

Okay, enough preamble, let’s dive in.

Grok 3 comes out tonight

Today is a big day, Grok 3 comes out tonight and it’s safe to say this is going to make this week more than a little exciting. There’s a lot of excitement around the new release of Grok with speculation that it will out-perform the latest-and-greatest from OpenAI.

I think Mario’s tweet captures the intensity of this moment, and while you might laugh at first, a real head-to-head battle is taking place here. Last week Elon Musk made a bid to buy OpenAI which Sam Altman scoffed at, now Elon’s upping the ante by releasing a model that could just steal marketshare away from the current AI leader.

For me, writing the Reasoning Models Substack, I don’t take sides, instead I let the models do the talking, and yes - I’m pretty excited to take Grok 3 for a spin.

Grok 3 launches tonight at 8pm PT, Elon’s calling it the “Smartest AI on Earth,” safe to say if you’re up at 8, you’ll probably want to tune in.

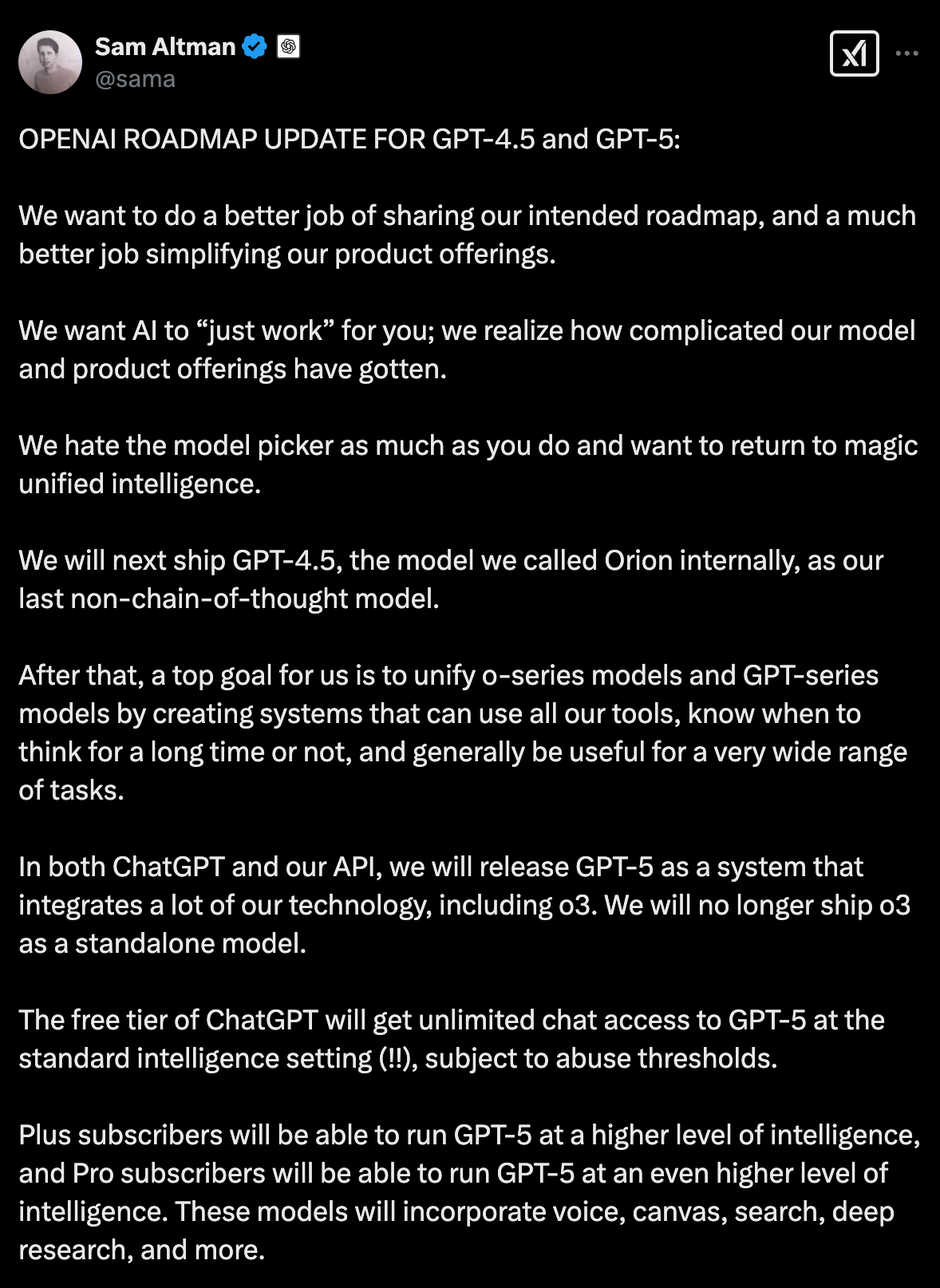

Sam Altman announces plans for GTP-4.5 and GPT-5

On the other side of the battle for AI supremacy, Sam Altman shared a lot last week, likely a good strategic move before Grok 3 steals the show for the next week.

Here’s the highlights:

OpenAI is releasing GPT-4.5 next

This model was originally called Orion but, yeah - OpenAI has some weird product naming issues so rather than sticking with a cool name, it’s going to be called GPT-4.5

This will be their last non chain-of-thought (i.e. reasoning) model

After that OpenAI plans to unify their models under GPT-5, their newest reasoning model which will include all the models that came before it

With this announcement also comes the end of o3 as it’s own standalone model, it will be built-into GPT-5

As usual, all the good stuff going to Plus and Pro subscribers with each tier getting a more high-octane version of the model

Perplexity launches Deep Research

So yes, Google was the first company to come out with a product called Deep Research, then OpenAI stole the show releasing a product, also called Deep Research, and now - Perplexity has announced a new product…called Deep Research.

This makes it official, the Deep Research war is on as three of the biggest companies in AI duke it out to put PhD-level researchers in our pocket.

Here’s the dets from Perplexity in their announcement:

Today we’re launching Deep Research to save you hours of time by conducting in-depth research and analysis on your behalf. When you ask a Deep Research question, Perplexity performs dozens of searches, reads hundreds of sources, and reasons through the material to autonomously deliver a comprehensive report. It excels at a range of expert-level tasks—from finance and marketing to product research—and attains high benchmarks on Humanity’s Last Exam.

We believe everyone should have access to powerful research tools. That’s why we’re making Deep Research free for all. Pro subscribers get unlimited Deep Research queries, while non-subscribers will have access to a limited number of answers per day. (Source - Perplexity Blog)

This week I’m planning on doing a little head-to-head pitting OpenAI’s Deep Research against Perplexity’s to see how they compare, so expect to see those results in the next issue!

Fine-Tuning DeepSeek R1 Locally

Like I said in my little opening segment above, I want this Substack to go beyond the news and actually share some fun stuff you can do running LLMs on your local machine. Let’s face it, one of the best ways to learn more about something is to go under-the-hood and make some tweaks and changes yourself.

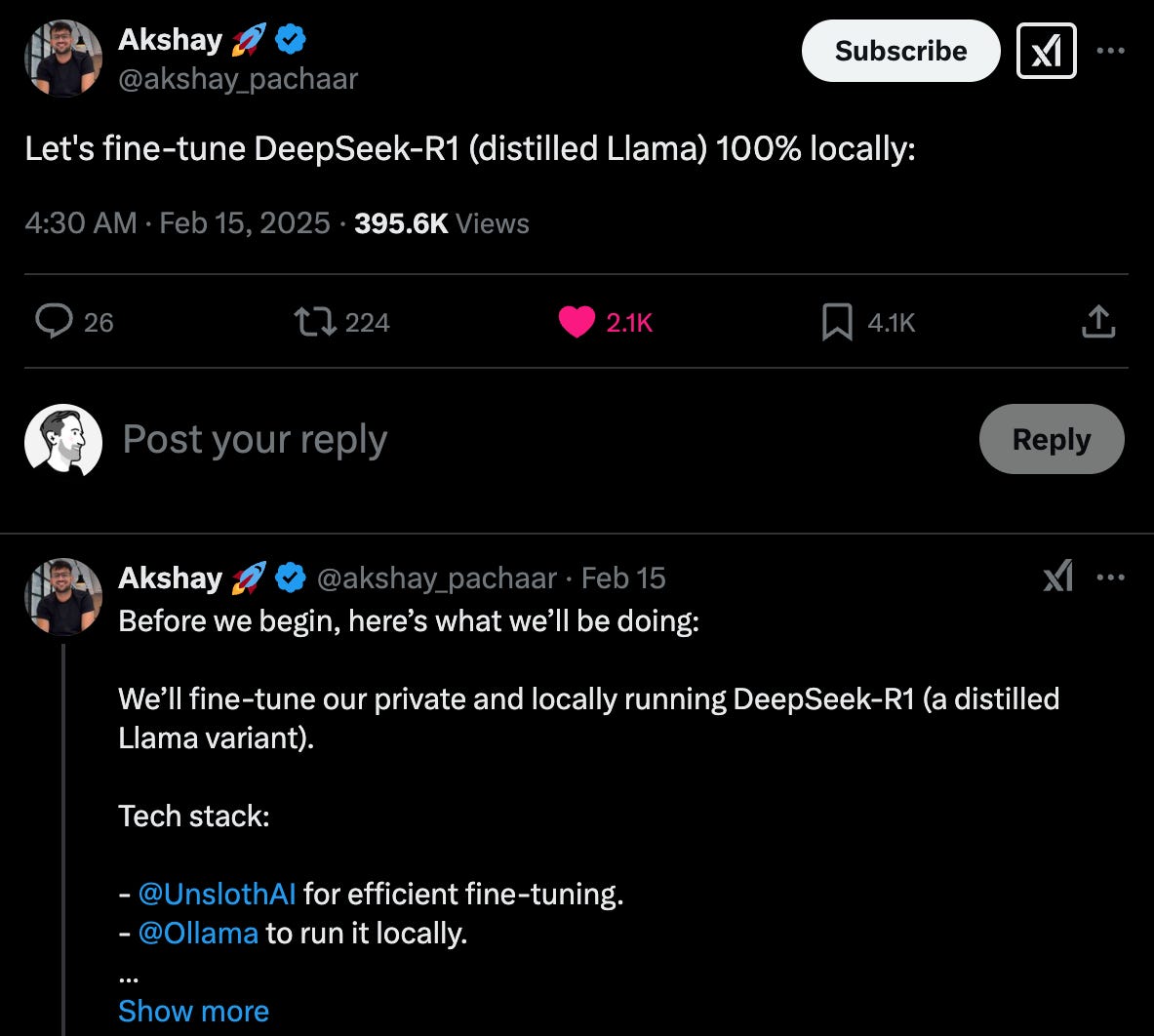

And IMHO, one of the best models to tinker with right now is DeepSeek R1 given that it’s an incredibly powerful model and completely Open Source. One of the best educators in AI space right now is Akshay Pachaar and two days ago he wrote a great tweet about how to fine-tune DeepSeek R1 on your local machine 🐳

The tech stack for this is pretty simple to get started with, you’ll start with Ollama to run the model locally and then jam with Unsloth to do the tuning itself.

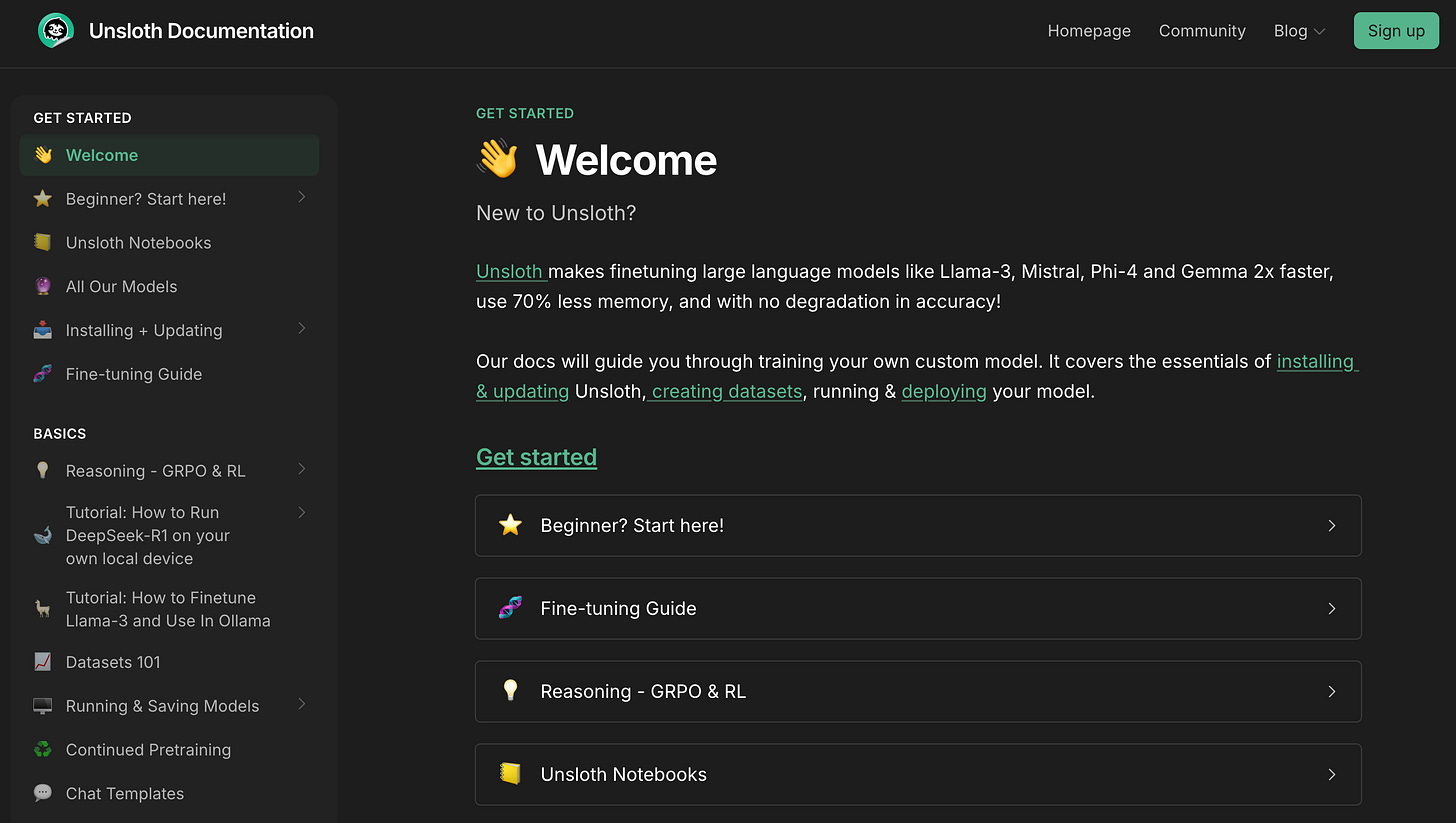

Oh and if you haven’t heard of Unsloth, it’s pretty darn cool and they have some of the best docs I’ve ever seen, seriously - go check out their docs.

While you can dive right into Ashkay’s tweet and start tuning away, I do recommend reading the “Fine Tuning Guide” section of the Unsloth docs so you can have a bit more background before you get started.

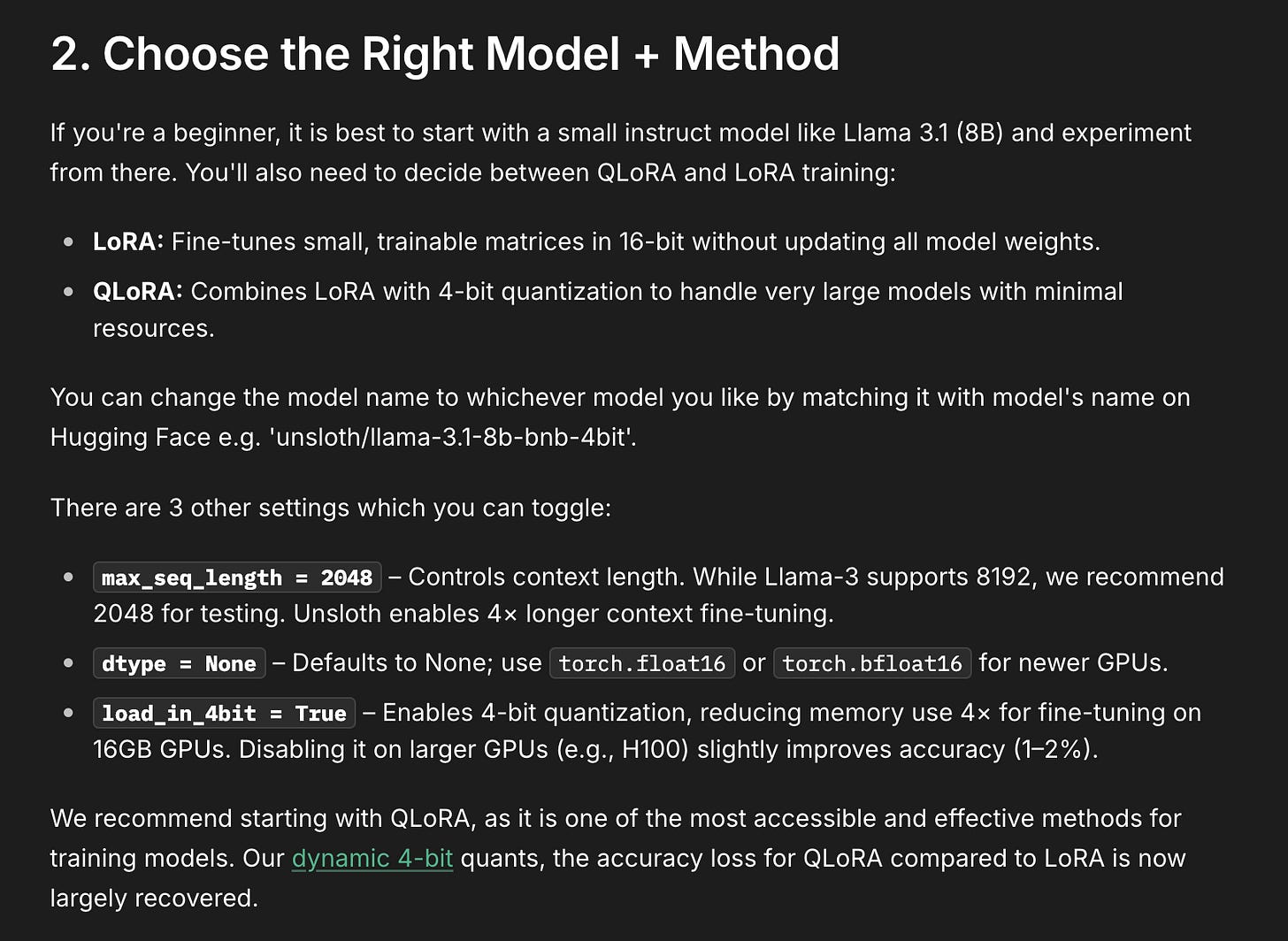

But if you’re too lazy to do that, just read this so you can understand the difference between LoRA and QLoRA.

Have fun and happy tuning!

OpenThinker - another Reasoning Model you should know about

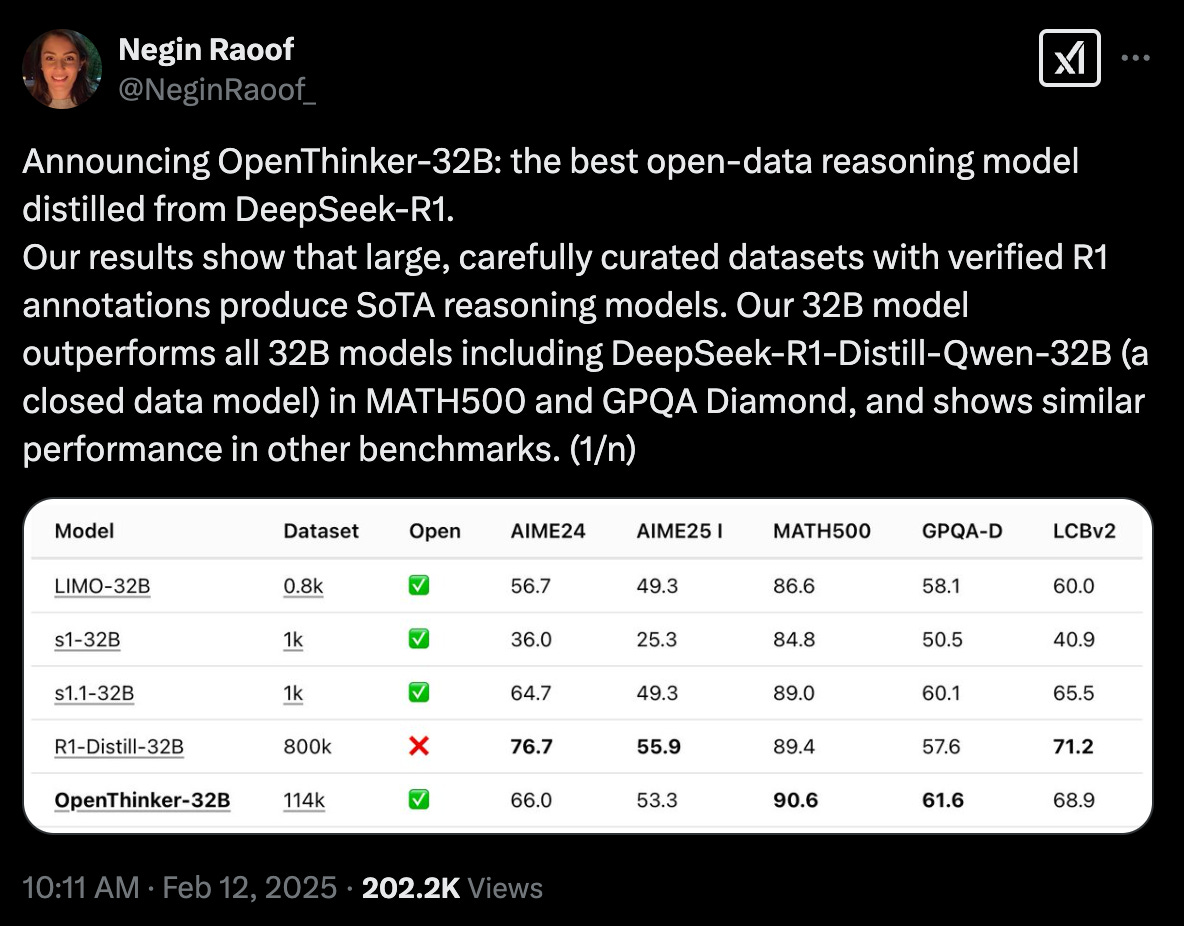

While you can fine-tune DeepSeek yourself following the path I shared above, you can also not do that and instead use a model tuned by the pros. And that’s where OpenThinker-32B comes in, one of the best open-data reasoning models out there, distilled from DeepSeek R1.

Here’s the dets:

As you can see from the table in the tweet, OpenThinker-32B actually out-performs R1-Distill-32B in MATH500 and GPQA-D, and it’s completely open.

So how did they do it?

We train OpenThinker-32B on the same OpenThoughts-114k dataset as our earlier model OpenThinker-7B. Using DeepSeek-R1, we collected reasoning traces and solution attempts for a curated mix of 173k questions. We are now releasing this raw data as the OpenThoughts-Unverfied-173k dataset. The last step in the pipeline is filtering out samples if the reasoning trace fails verification. The full code which we used to construct our dataset is available on the open-thoughts GitHub repository. (Source - OpenThoughts Blog)

I think it’s beyond awesome that the team at OpenThoughts shared the full code that they used to build their dataset. This is a great way for anyone who wants to learn how to really tinker away with models to do so by seeing some of the best in action.

Bonus content: Repo Prompt CodeMap Update

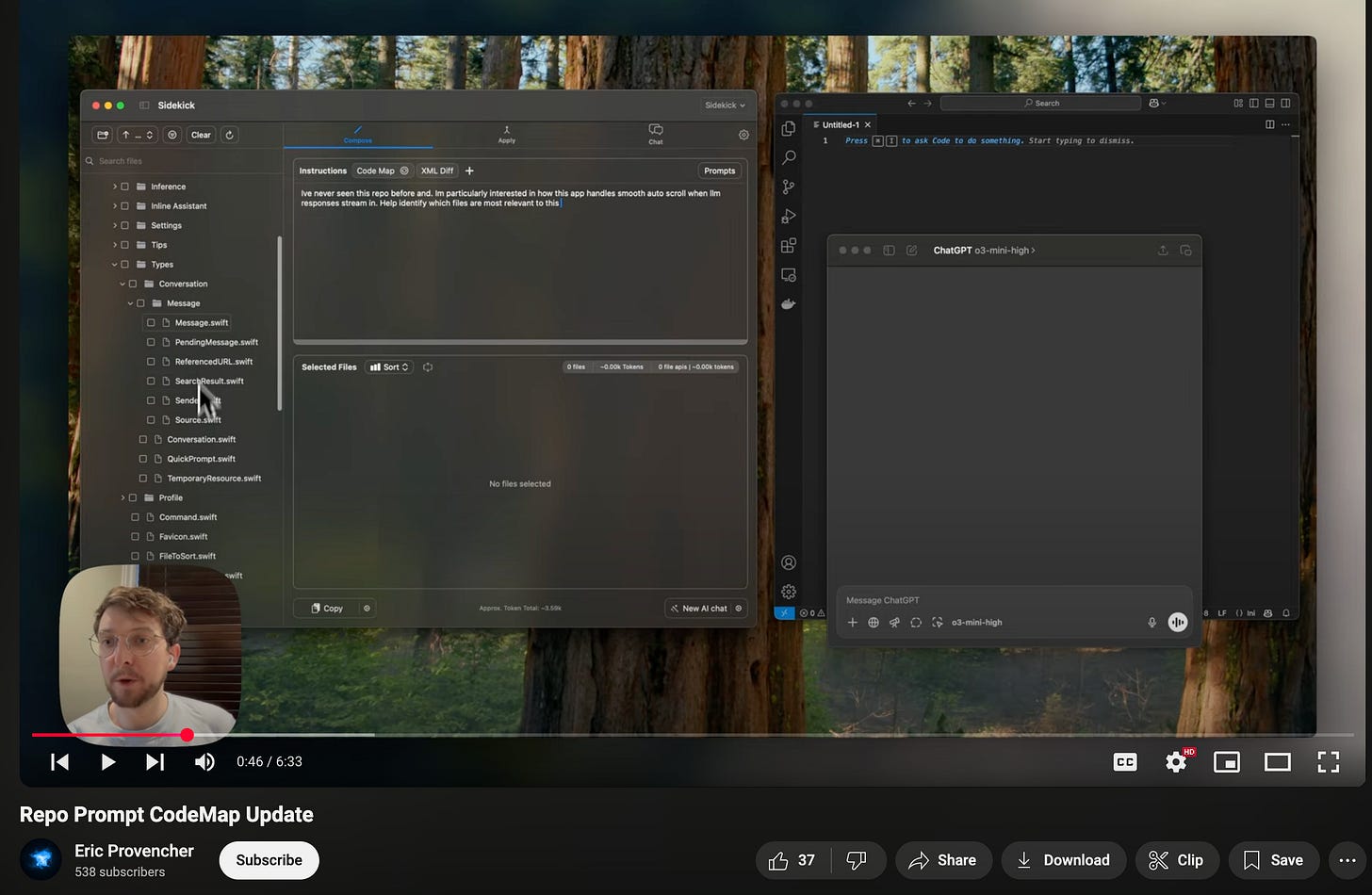

Lately I’ve been really digging a cool new tool called Repo Prompt from Eric Provencher, a research scientist at Unity. The TLDR; on this tool is:

an app designed to remove all the friction involved in iterating on your local files with the most powerful language models

Last week Eric released a new update that I really dig and thought was worth putting in this issue of my Substack. Here’s a bit more about it:

The codemap update just shipped and it's the start of much smarter project awareness features in Repo Prompt. Give this a watch to understand how you can leverage automated reference detection to reduce hallucinations with the best language models, and reduce the burden involved in building context for your prompts.

And the best way to see this feature in action is with the You Tube video below that Eric released four days ago ⬇️

Right now Repo Prompt’s Mac App is in beta so you can use it for free, or if you use a PC, join the waitlist and use it once the Windows version becomes available. Either way, I think Eric’s working on some really neat stuff here and I’m excited to continue to see it develop!

And that wraps up Issue #6 of the Reasoning Models Substack, thanks for reading and I’ll see you next time.