The Reasoning Models Substack - Issue #8

OpenAI's Studio Ghibli craze, Google Gemini 2.5 takes on Claude, and Amazon announces Nova

Hello, happy Tuesday, and welcome to the Reasoning Models Substack ☀️

A lot happened in the AI world last week, and the heat is on when it comes to models that software engineers are using for coding. For those keeping score, Anthropic stole the show last summer with Claude 3.5 Sonnet.

Then OpenAI jumped into the mix with o1-mini followed by o3-mini at the end of last year. Of course, as things tend to go in the foundational modeling space, Anthropic quickly leaped ahead, again, with Claude 3.7 Sonnet.

Over the last year, Google hasn’t really been in the mix when it comes to coding, but with Gemini 2.5, that might all be changing.

Oh, and now Amazon has arrived. Yes, it’s enough to make your head spin, but rather than spinning out of control, let’s do a deeper dive so you can learn how to turn all these amazing new models into your new superpower 🦸‍♂️

As always, my goal is to keep each issue of the Reasoning Models Substack short and sweet so you can get to the good stuff and back to your day, so with that, grab your coffee and let’s dive in!

OpenAI just had its Studio Ghibli moment

Last Tuesday, Sam Altman shared this tweet announcing ChatGPT 4o’s new image generation capabilities.

As you can probably tell, there’s an image in the tweet, and if you’re a Studio Ghibli fan like me, you’ll recognize the style. Turns out you aren’t alone. A lot of people immediately noticed - holy moly, GPT-4o can generate Studio Ghibli-style images, and insanely well.

This started an absolute non-stop Studio Ghibli imagefest on X, with just about every popular scene from TV shows like Severance, plus plenty of news events, finding its way into Studio Ghibli-style images.

This turned out to be so insanely popular that the OpenAI team could barely keep up. Sam even had to come onto X to tell everyone to slow down, given that their new image gen feature was creating “biblical demand” 🤯

Of course, now that a week has passed, OpenAI has things in a good place, so much so that Sam announced yesterday that image gen is now available to all free users.

So if you didn’t get a chance to see all of your favorite family photos or vacation adventures in all their Studio Ghibli glory, now is your chance. Just note that you can’t explicitly say you want the image to be in Studio Ghibli style. You’ll need to say something like “Japanese animation style image.”

Gemini 2.5 is here, and it’s really freakin’ good

The same day that OpenAI announced ChatGPT 4o image gen, Google announced Gemini 2.5, because in the foundational modeling world, we can’t have just one announcement in a single day.

And I’m pretty darn excited about Gemini 2.5 because it represents the next evolution of reasoning models, or at least Google’s approach to reasoning models. Here’s the skinny from Koray:

Gemini 2.5 models are thinking models, capable of reasoning through their thoughts before responding, resulting in enhanced performance and improved accuracy.

In the field of AI, a system’s capacity for “reasoning” refers to more than just classification and prediction. It refers to its ability to analyze information, draw logical conclusions, incorporate context and nuance, and make informed decisions.

For a long time, we’ve explored ways of making AI smarter and more capable of reasoning through techniques like reinforcement learning and chain-of-thought prompting. Building on this, we recently introduced our first thinking model, Gemini 2.0 Flash Thinking.

Now, with Gemini 2.5, we've achieved a new level of performance by combining a significantly enhanced base model with improved post-training. Going forward, we’re building these thinking capabilities directly into all of our models, so they can handle more complex problems and support even more capable, context-aware agents. (Source - Google DeepMind)

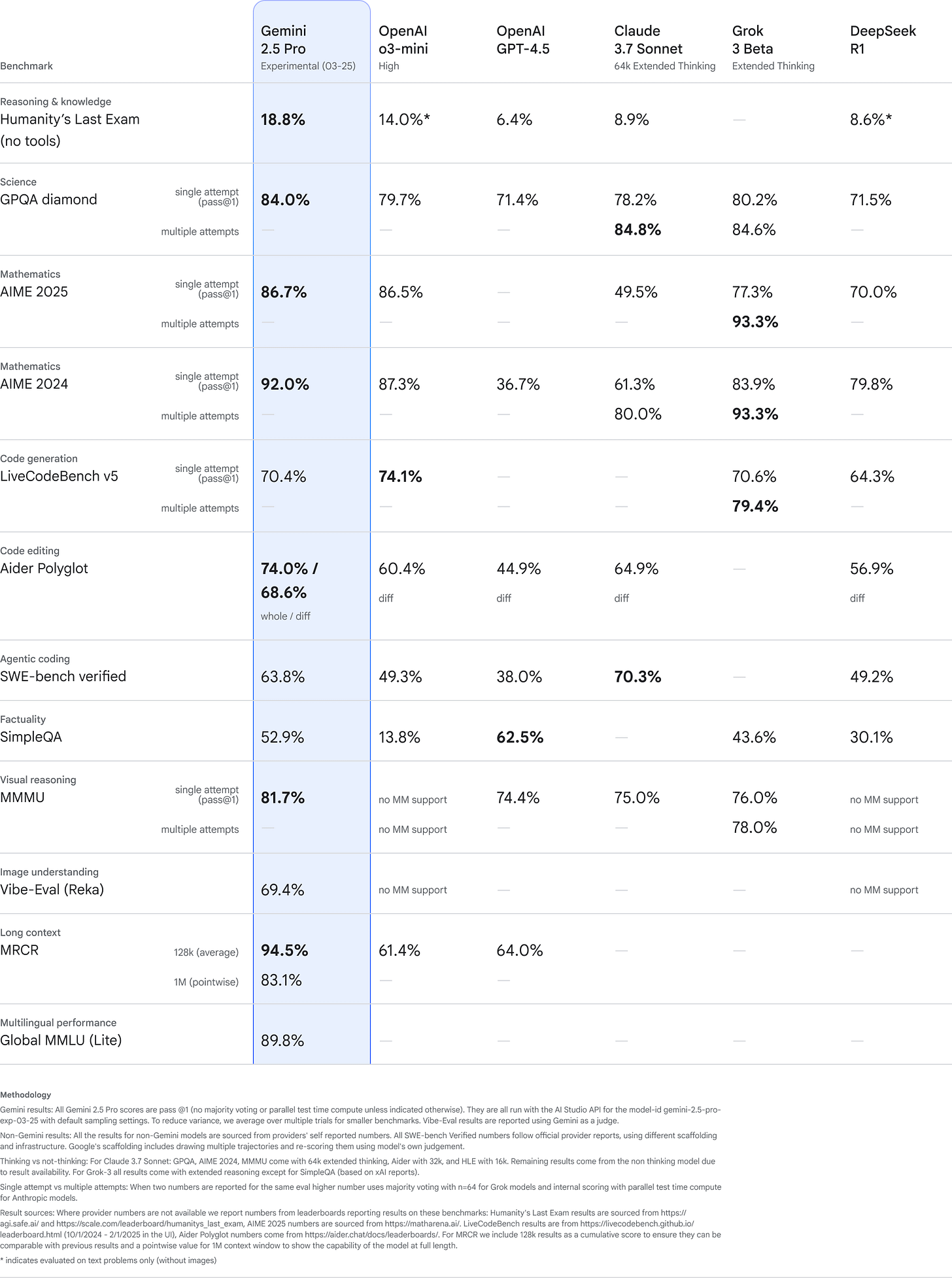

The benchmarks for Gemini 2.5 are pretty insane. Just take a look for yourself: it crushes both GPT-4.5 and Claude 3.7 Sonnet (64k extended thinking) in a lot of areas.

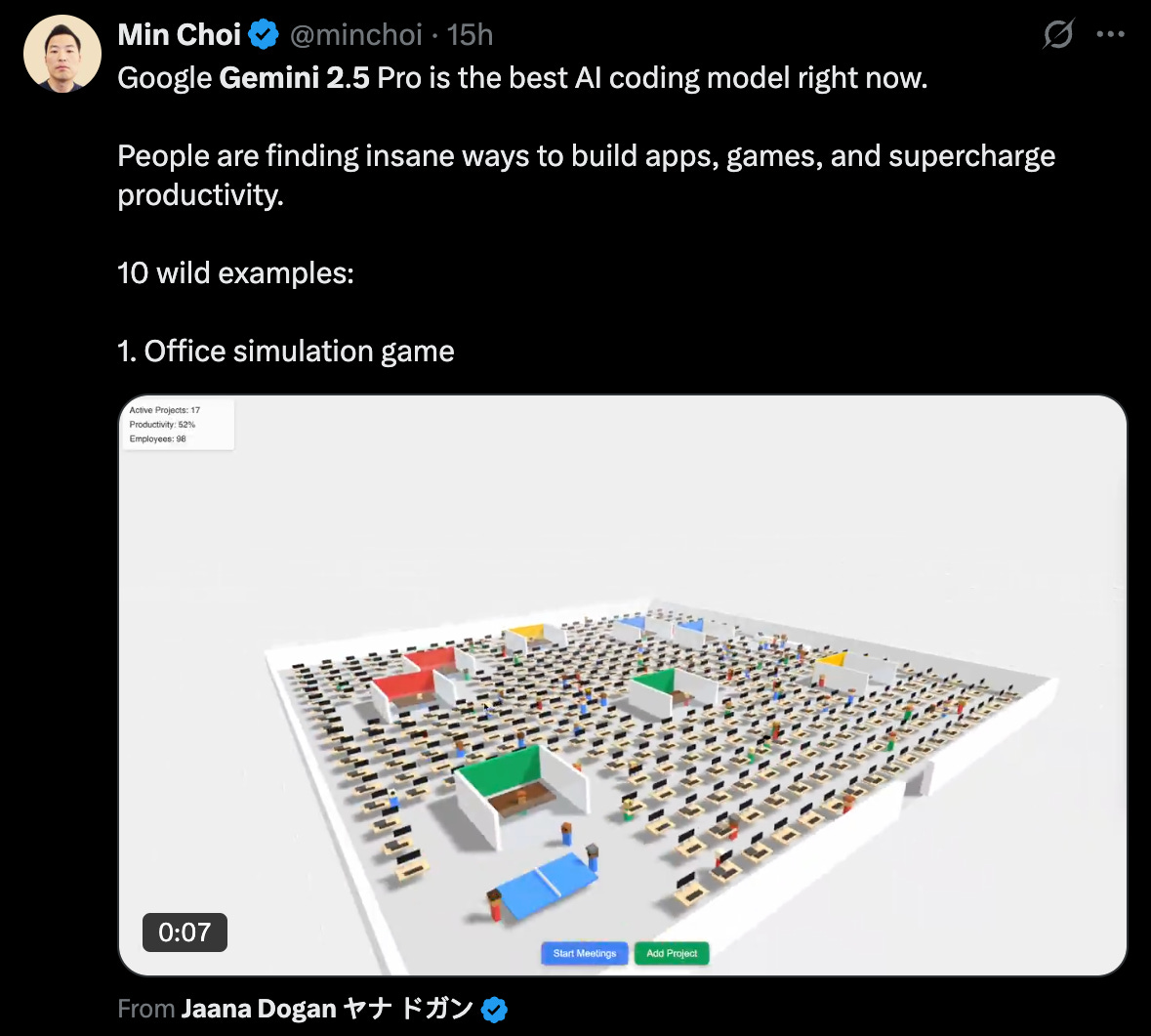

The dev community on X seems to be really enjoying the new model, with some claiming it is now the best model out there for coding.

So…if you haven’t used Gemini 2.5 yet, what are you waiting for? Seriously, Google really cooked with this one 🔥

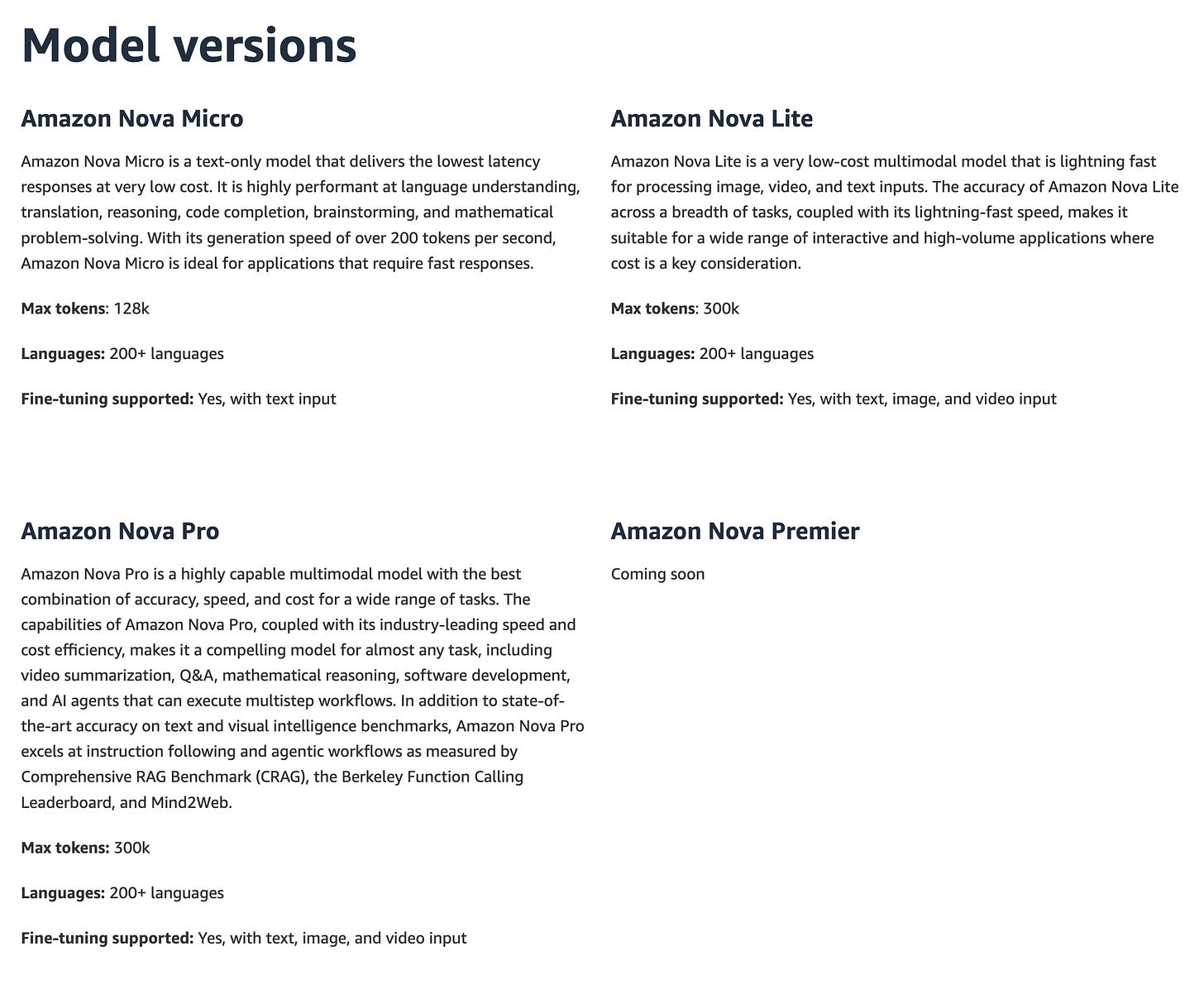

We all knew this day was coming, and yes - it’s here. Amazon is joining the big dogs in the foundational modeling game with Nova. What makes Nova pretty interesting is that it can take action in a web browser like OpenAI’s Operator can…but Operator is only available to people paying OpenAI $200/mo.

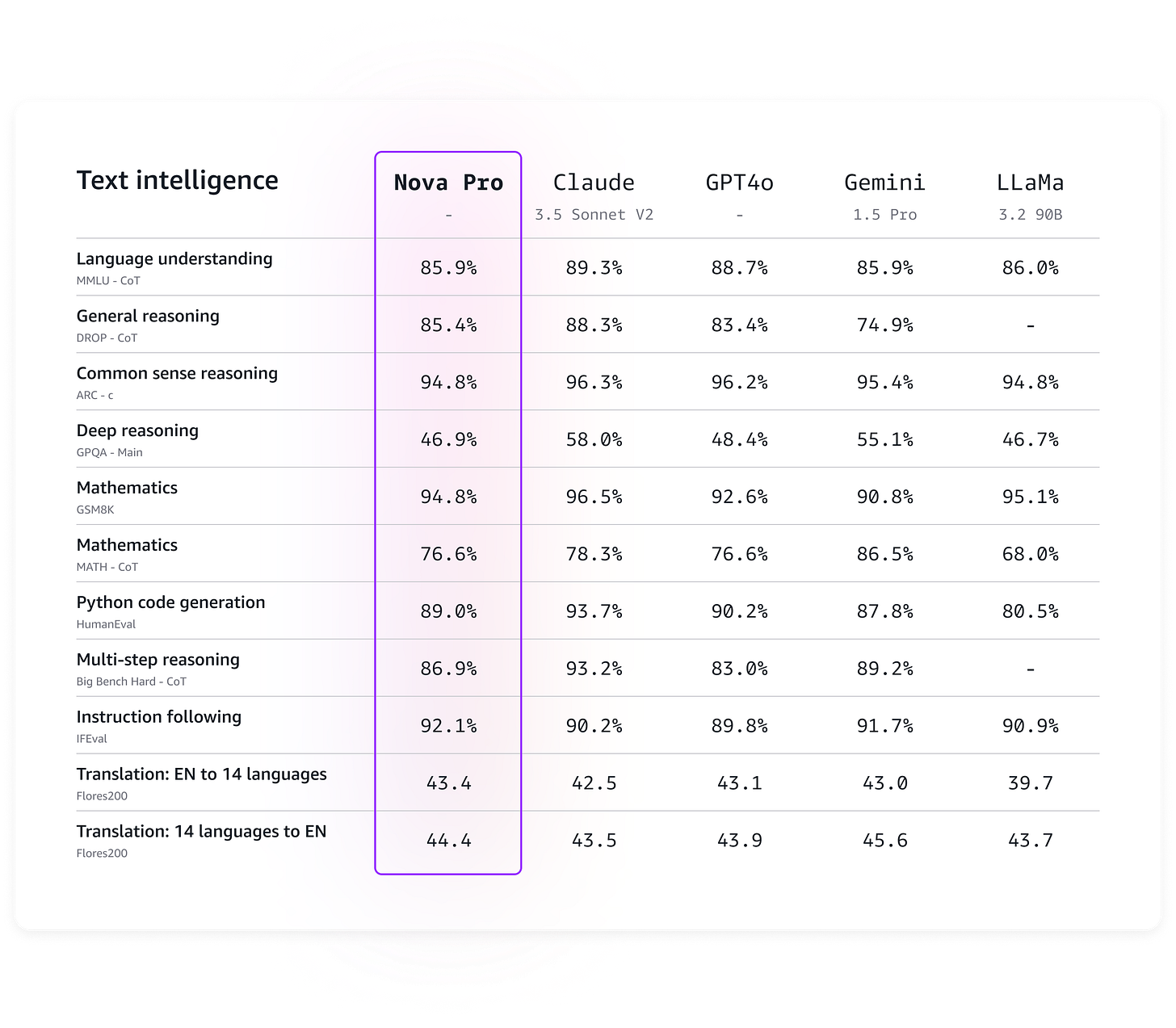

While that’s exciting, it’s hard to ignore the fact that Nova does lag behind the other foundational models it competes against. I also find it a bit odd that Amazon decided to benchmark against GPT-4o and Claude 3.5 rather than GPT-4.5 and Claude 3.7, which is what Google is benchmarking against, and what really everyone should, since those are the latest models from OpenAI and Anthropic.

So this chart is a bit puzzling. It’s like Amazon is bragging about their model not performing quite as well as the last-generation models from Anthropic and OpenAI 🫠

That being said, Amazon is just getting into the game, and given the amount of engineering horsepower they have, I think it’s safe to say this is just a starting point. There’s a lot more to come.

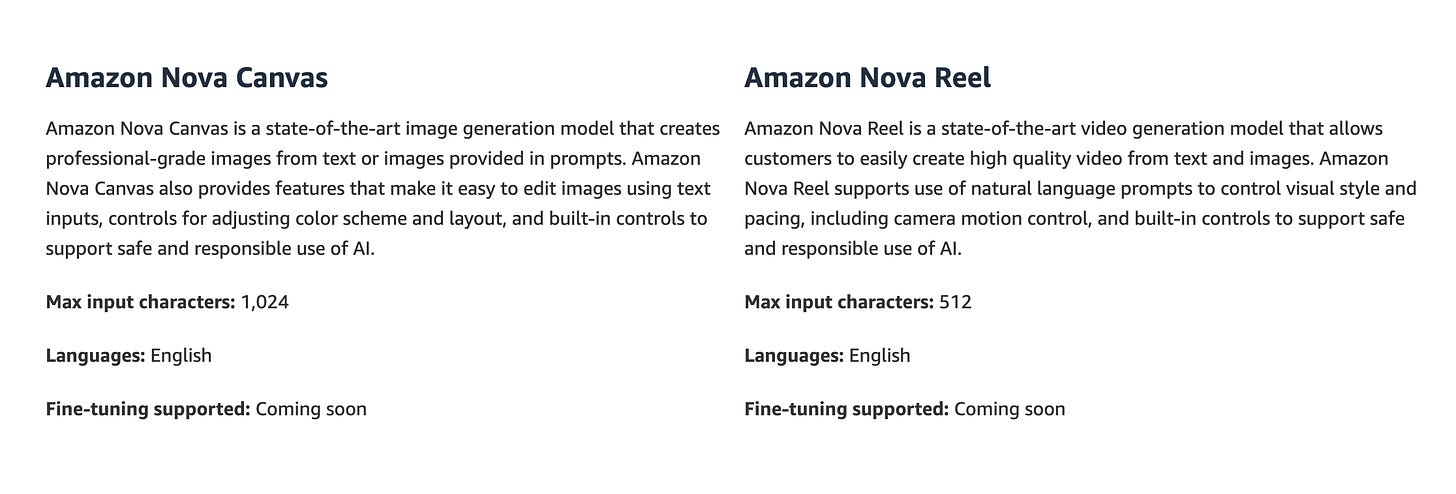

In classic Amazon fashion, they also released a lot of stuff all at once, not just one model but six…

I’m definitely interested to see how this develops. Now that I’ve used Gemini 2.5 for a while, it’s time for me to switch gears and take Nova for a spin, which is my plan for this week.

Okay, and that’s a wrap. Done with your coffee? Go get a fresh cup and move forward with your day a little bit more in the know about what’s going on in the world of Reasoning Models.

As always, thanks for reading, and if you enjoyed this, please share my little Substack far and wide!

Until next time.