The Reasoning Models Substack Weekly Recap - 2.9.25

OpenAI launches Deep Research, Lex drops a five-hour podcast, and has this startup just fixed RAG's biggest problem?

Hello, happy Sunday, and welcome to The Reasoning Models Substack and to a recap of the last week, which in many ways feels like a year’s worth of innovation jammed into seven days.

The AI world is moving fast, and at the center of it all are reasoning models and the race to build the best, most performant, most cost-effective reasoning model on the planet. The heat is on 🔥

So let’s dive in and talk about everything that happened this week, starting with what is likely the biggest new release in the world of reasoning models. Yes, I’m talking about Deep Research from OpenAI.

Deep Research, the OpenAI version (not to be confused with the Google version)

Deep Research launched exactly one week ago, and the response has been pretty stellar, with some incredibly savvy people trying it out and giving it their seal of approval.

As the name implies, most people compare Deep Research to having an actual PhD-level researcher do the research for you. Except, unlike a human, this researcher has access to an almost limitless amount of data and reasoning abilities that make it insanely powerful.

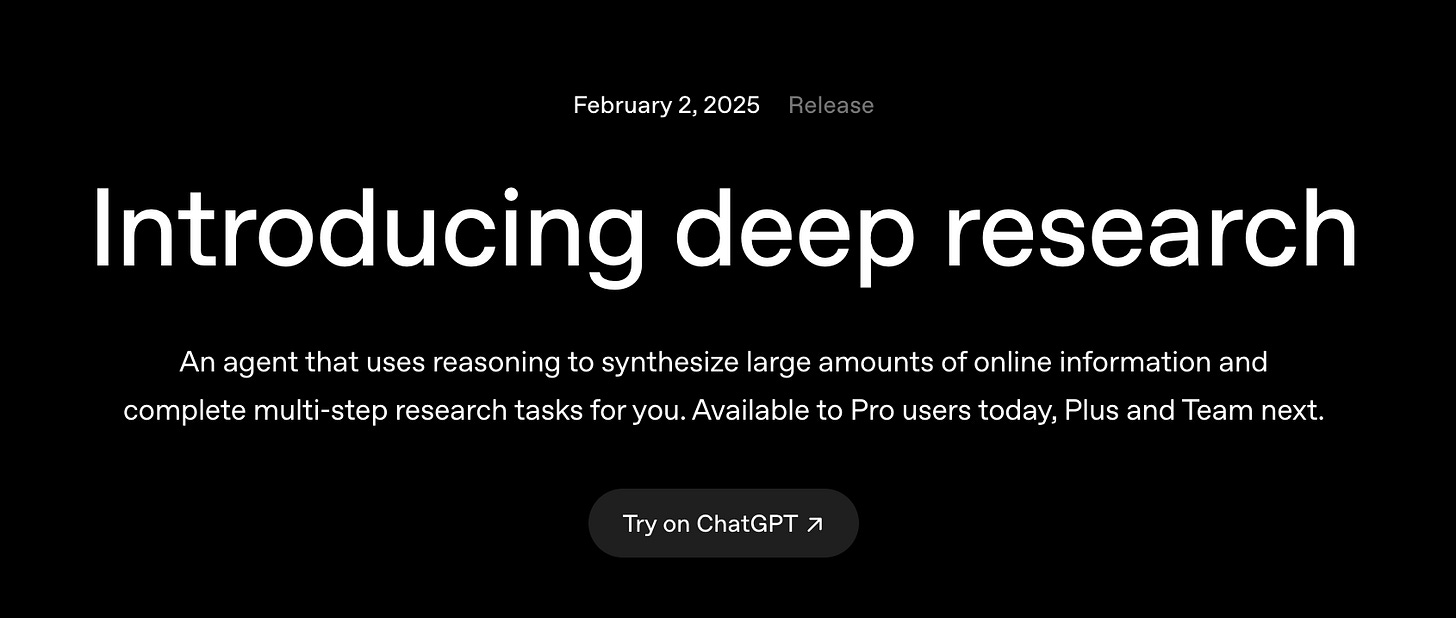

I think Sam Altman’s post announcing it hit the nail on the head with its last sentence: “can do tasks that would take hours/days and costs hundreds of dollars.” But honestly, I think we’re quickly seeing that this is an incredible understatement. It seems more realistic to say that Deep Research can take tasks that would take years and hundreds of thousands of dollars and do them in a matter of days or weeks for thousands of dollars, or less.

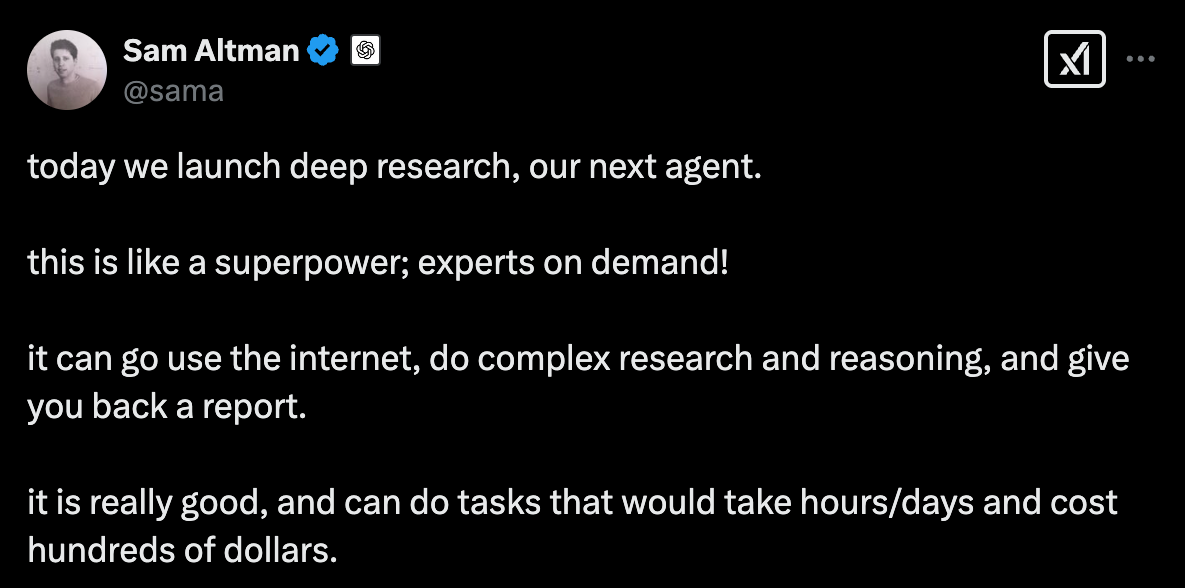

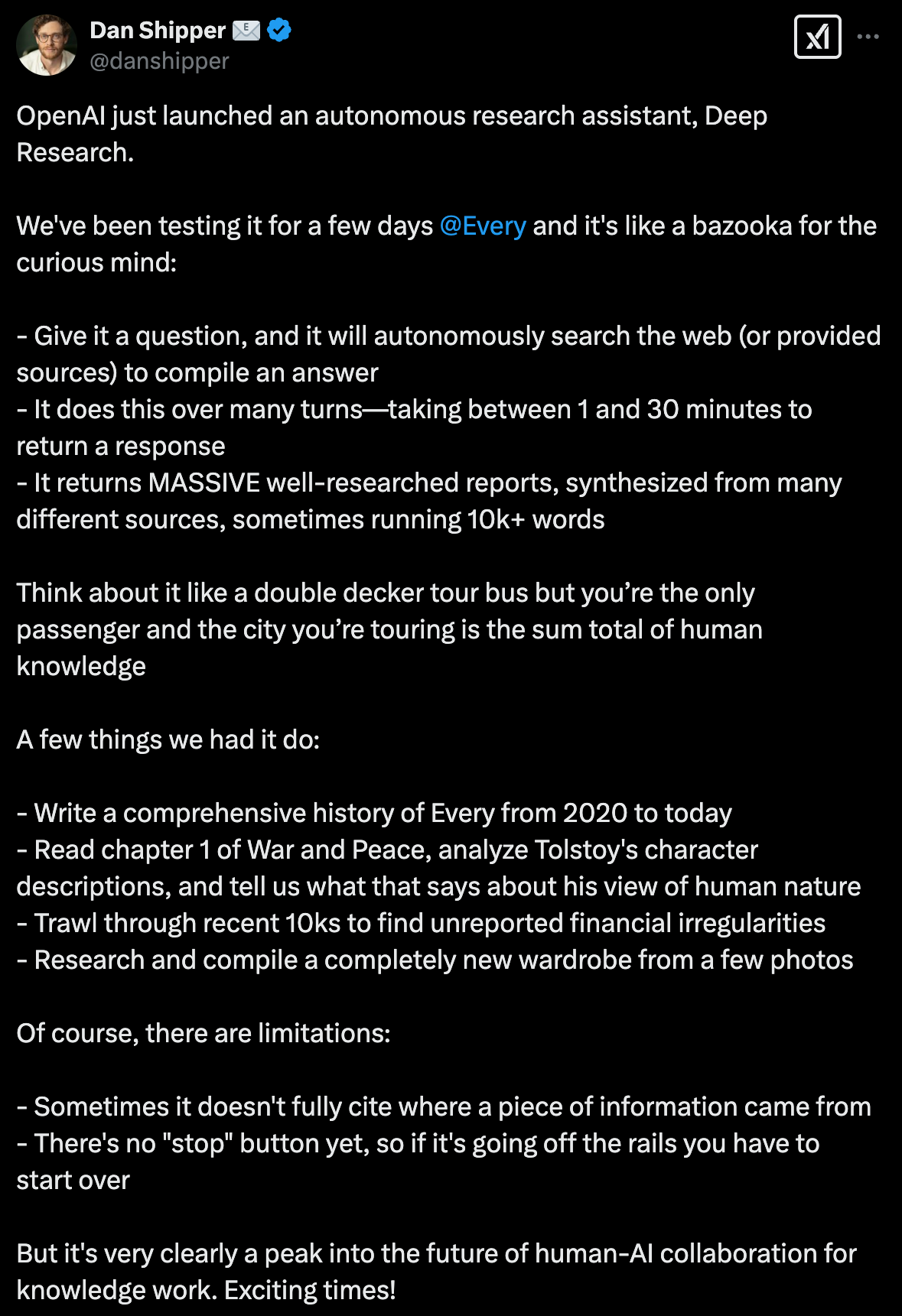

Companies that had early access to Deep Research shared their experiences with the new model online after the release. My favorite was this one from Dan Shipper, founder of Every.

Of course, I should probably address the elephant in the room 🐘

Google already has a research tool in Gemini called, drumroll please... Deep Research. So yes, OpenAI literally took the exact name Google gave its product and used it for its own.

It’s like someone launching a competitor to Photoshop and calling it Photoshop. Bold move, but if anyone can pull it off, it’s OpenAI.

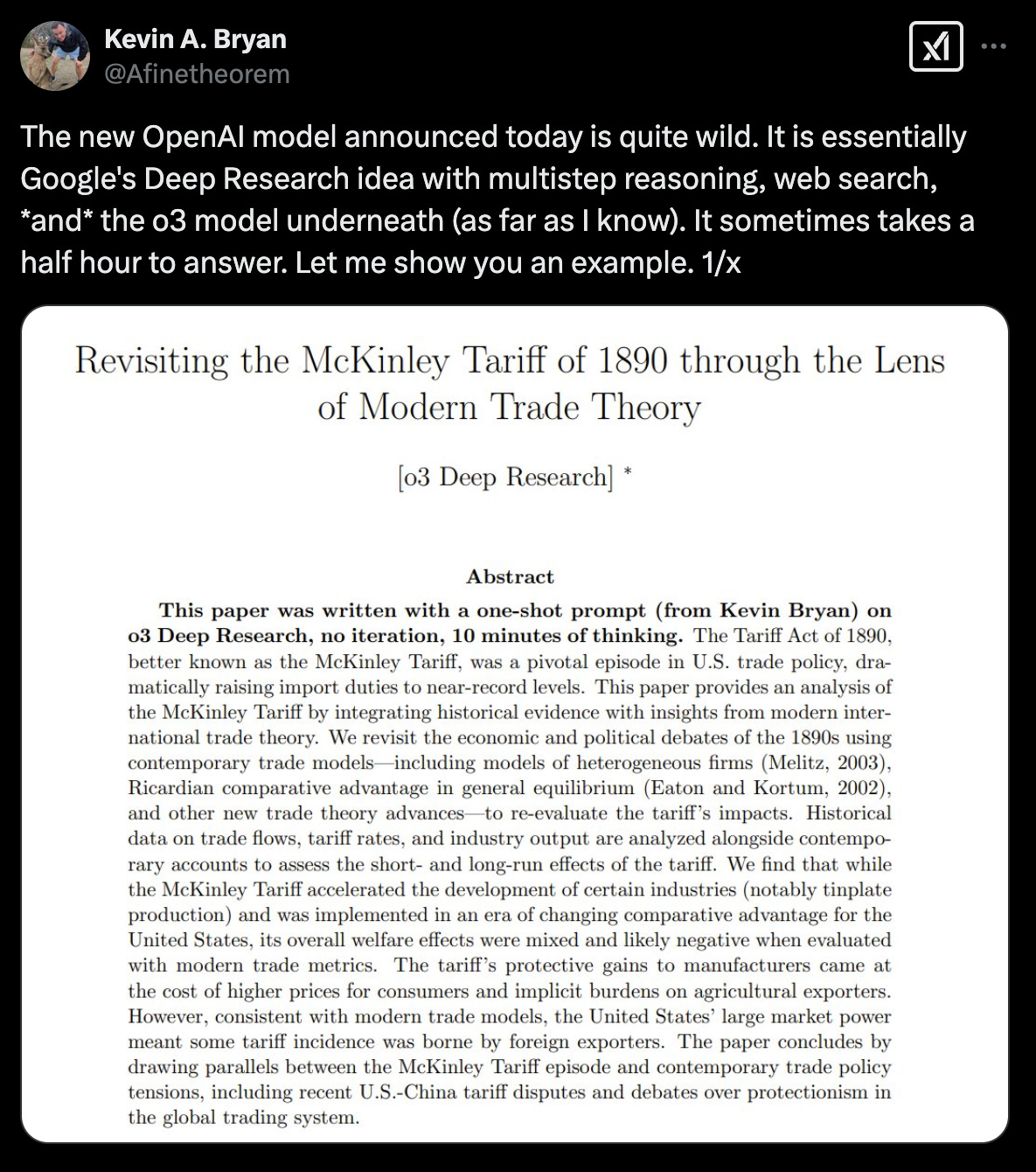

That being said, mirroring the name doesn’t mean OpenAI built the exact same thing. There are key differences, and seemingly some advantages, to OpenAI’s version of Deep Research. This tweet from Kevin Bryan does a great job breaking them down.

Okay, that’s the TL;DR (and maybe a bit more) on Deep Research. I’d highly recommend reading OpenAI’s official announcement if you want to dive deeper.

Lex dropped a five-hour podcast about DeepSeek, OpenAI, Google, Anthropic and a whole lot more

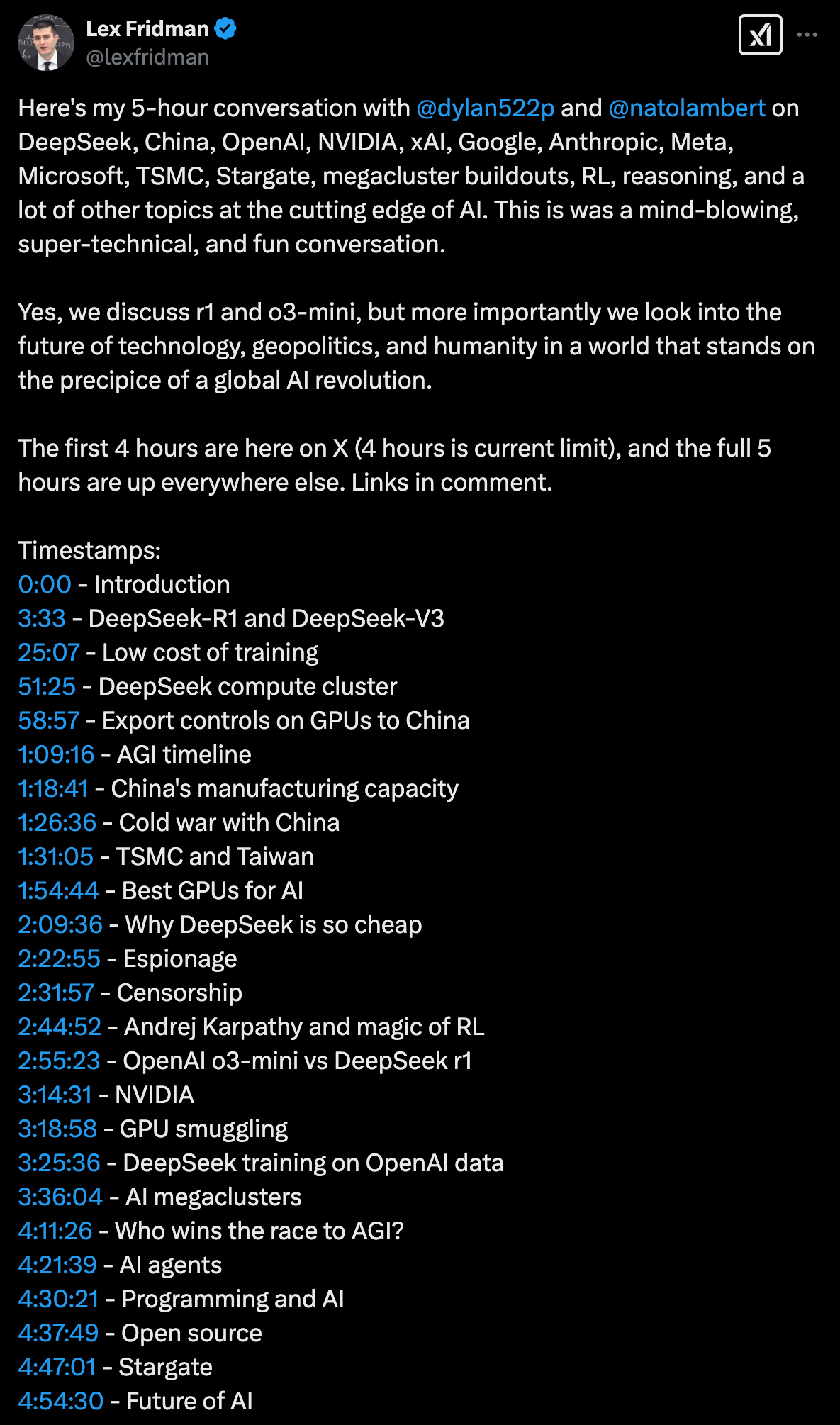

With so much going on in the AI world right now, it’s not surprising that the one and only Lex Fridman decided to drop what I think we can aptly call a mega podcast episode.

Yes, it’s five hours long, but there’s probably no better way to learn this much about the current state of AI in five hours than this episode from Lex.

I won’t write much more here because, honestly, Lex lays it out pretty clearly, so you know every single topic he covers and where to find it. You might not have time to listen to all five hours, but if there’s a topic you want to learn more about, you can jump straight to it.

Ready to watch or listen? Here’s the YouTube link.

Has this startup just fixed RAG's biggest problem?

Okay, now let’s talk about RAG and one of the biggest problems facing anyone who builds RAG systems: extracting text from PDF files. This is a massive pain point that makes it much harder to get the data you want into your RAG system, but this pain train might be a thing of the past thanks to a new startup, Chunkr.ai.

Chunkr’s own one-line description sums it up pretty darn well: it’s an API service that converts complex documents into LLM/RAG-ready chunks. My timeline on X has been flooded with posts about Chunkr, and it makes sense. It solves a massive problem, and it’s open source.

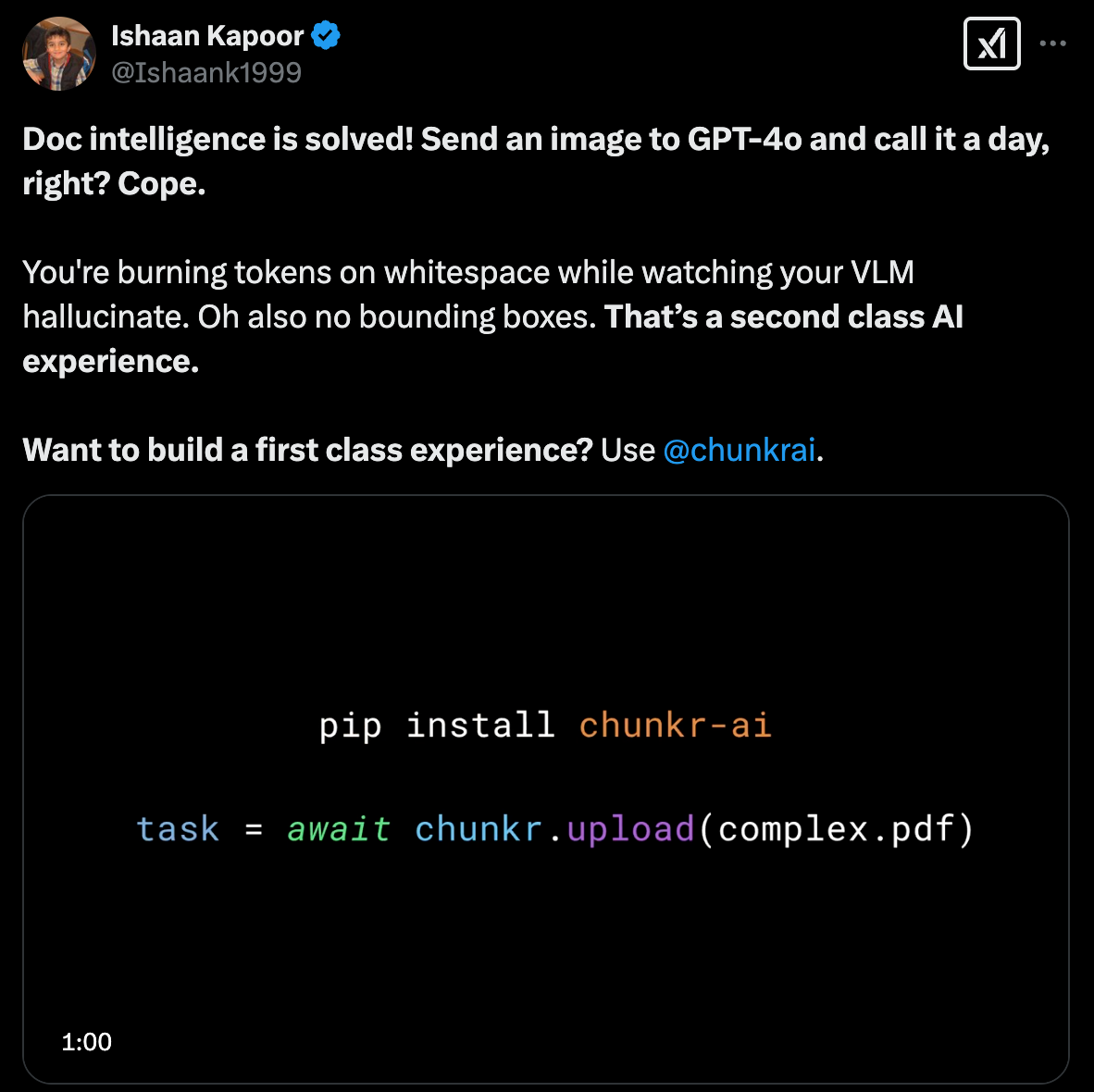

I thought this post from Ishaan was pretty hilarious, and it highlights the challenge people face when building RAG systems today. You can’t just send an image to GPT-4o and call it a day. In fact, you can’t send an image or PDF anywhere and expect to easily convert the data in that file into an LLM-ready format. Until now, of course.
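If you’re newer to RAG, a “chunk” is just a piece of a document small enough to embed and retrieve on its own. The hard part Chunkr tackles, pulling clean text and structure out of messy PDFs, is well beyond a few lines of code, but the last step can be sketched. Below is a minimal, hypothetical Python example (not Chunkr’s API or method) that assumes you already have plain text and splits it into overlapping word windows; the function name `chunk_text` and its default sizes are illustrative assumptions.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Hypothetical helper: split plain text into overlapping word windows.

    Assumes the text has already been extracted from the PDF; real
    pipelines usually split on headings, tables, or tokens instead of words.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    step = max_words - overlap  # how far each new window advances
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this window has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


if __name__ == "__main__":
    doc = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(doc, max_words=4, overlap=1):
        print(chunk)
    # w0 w1 w2 w3
    # w3 w4 w5 w6
    # w6 w7 w8 w9
```

The overlap means a sentence that straddles a chunk boundary can still be retrieved from either neighbor, at the cost of storing a few words twice.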

So what jobs are LLMs going to replace?

Okay, moving away from the AI engines themselves, let’s go back up to 30,000 feet and talk about the impact LLMs are going to have on the world, and on the job market specifically.

Last week someone (I think Min was the prompter behind it, but I’m not sure) asked Deep Research which jobs it thinks will be replaced by AI. Here it is, and yes, it’s big.

The best LLM deep dive I’ve seen yet

Okay, last but not least, I was pretty blown away last week by a new LLM deep dive that Andrej Karpathy released. Seriously, this is probably one of the best deep dives I’ve ever seen, and if you really want to know what’s going on under the hood of an LLM, there’s probably no faster or better way to learn it than watching this.

Bonus round

I’m going to try something new at the end of each post. I’m calling it “bonus round,” and it’s essentially just a few links to posts on X about reasoning models that I found in the last week and thought were pretty darn interesting, but didn’t cover in detail in this issue.

So here goes. If you want to really geek out, your bonus content is below:

Make your RAG 20x smarter - https://x.com/akshay_pachaar/status/1886396550089511119

Train your own reasoning model with Unsloth - https://x.com/UnslothAI/status/1887562753126408210

Deep Agent R1-V - https://x.com/liangchen5518/status/1886171667522842856

Okay, that’s it for this week’s issue. Thanks for reading, and I’ll see you next week!